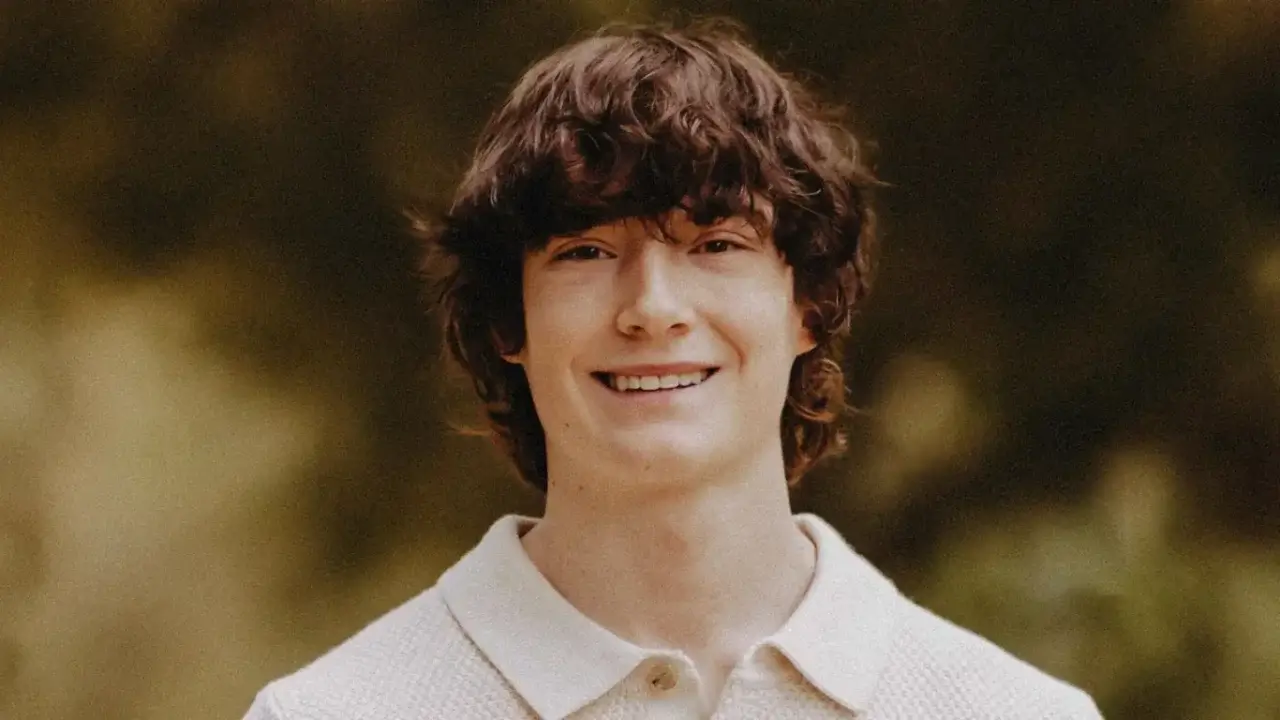

Parents of deceased teen: AI safety measures are not enough

In California, the parents of a 16-year-old who died have filed a lawsuit against OpenAI, the developer of ChatGPT. They claim the chatbot encouraged their son to take his own life.

After the incident, OpenAI announced it would introduce new parental controls. Parents will be able to link their own account to their child’s, restrict certain features, and receive notifications if the system detects that the teen is in “severe emotional distress.”

However, the teen’s parents and their lawyer consider these measures insufficient. They say OpenAI is trying to change the subject rather than solve the problem, and that the chatbot should be temporarily suspended.

OpenAI admitted that in some cases the system did not function as expected and stated that additional mechanisms are being developed with experts to support young people’s mental health.
