ChatGPT Restricts Personal Medical and Legal Advice

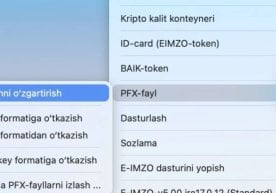

ChatGPT, the popular artificial intelligence chatbot, now places new restrictions on individual medical and legal advice. OpenAI has updated the service's usage rules so that personal medical or legal advice can no longer be provided to users without the involvement of licensed specialists.

According to the company's statement on the changes, incorrect recommendations on matters of human health or legal rights can lead to serious negative consequences.

ChatGPT can therefore provide only general information. For example, it answers general questions such as "What is this legal concept?" or "What steps are often taken in this case?", but it does not give recommendations tailored to a specific individual case.

Use of the service is also restricted for individual decisions in education and housing; personal decisions related to insurance, immigration, and public services; and personal financial or legal advice.

In addition, it is strictly prohibited to use the service to promote suicide, threats, aggression, extremism, sexual violence, and gambling.

The company introduced the new rules to protect user safety and to guard against misuse of artificial intelligence.

As a source of information, ChatGPT is considered a very powerful tool. However, it does not replace a licensed medical professional or lawyer. If your question concerns your own specific situation, the right course is always to consult a specialist in the relevant field.

These changes encourage people to be more cautious when using artificial intelligence tools. While such tools may give quick answers to routine questions, the update is a reminder that human expertise is necessary for important decisions.

The update is seen as an important step toward balancing responsibility and safety in both technology and civil society.
