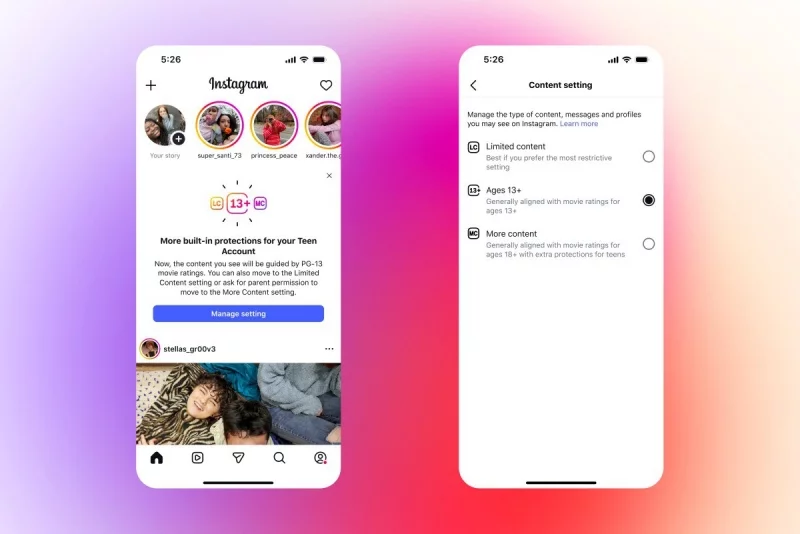

Instagram is introducing new restrictions to protect teenagers, TechCrunch reports. Content shown to users under 18 will now be filtered according to the PG-13 rating, meaning they will not be able to view material related to violence, explicit scenes, or drugs.

According to the company, teenagers will not be able to change these settings on their own; any change requires the permission of a parent or guardian.

In addition, the social network is launching an even stricter filter called “Restricted Content.” It will prevent teenagers from viewing certain posts or leaving comments under them.

Starting in 2026, Instagram plans to apply this filter to AI (artificial intelligence) chats as well. This means that when a young user communicates with an AI, they will also be prevented from seeing age-inappropriate content.

The company will also protect teenagers from accounts that post unsuitable material. If a user under 18 follows such an account, they will not be able to see its posts, interact with it, or find it in recommendation lists.

For now, these updates are being rolled out in the United States, the United Kingdom, Australia, and Canada. The company stated that the new features will be implemented globally next year.
